This user's guide is still to be written; in the meantime, here are a few hints about OSDL's features.

One may refer to the OSDL Developer guide for further information.

See also: Ceylan user's guide for common information.


Note: this documentation is largely outdated and needs a serious update and rewrite.


OSDL can be installed with three methods: the first is dull and risky, the second is recommended, and the third is still to be written.

One has to compile from sources all of OSDL-0.4's prerequisites.

As you can see, the list is far too long to be pleasant. We therefore set up an automatic tool that manages the whole build process on your behalf: LOANI.

Go boldly where no one has gone before, fasten your seat-belt, and use LOANI (do not be too anxious, since LOANI has been stable for a while!) [jump to LOANI's quickstart]

As soon as OSDL is widespread enough that its main audience is no longer OSDL developers but OSDL users, a set of binaries will be released for each supported platform, including various GNU/Linux distributions (Debian [.deb], Gentoo [emerge], Fedora Core [rpm]).

For the moment, it is far more convenient to let LOANI handle that, and to rebuild everything from source automatically.

The screen coordinates are measured in the following way:

Therefore the origin (the point whose screen coordinates are [0,0]) is in the upper left corner of the screen, and the third coordinate, corresponding to the z-axis (perpendicular to the plane containing the screen), goes from the viewer's eyes to the screen.

Many of OSDL's low-level graphic-drawing primitives do not lock the target surface for you. The reason is the cost of locking a surface, which can be heavily reduced depending on the user's specific needs. The usual scheme should be: lock the surface, do all the drawing you can, and then unlock the surface. The finer the locking granularity, the more locks your program has to acquire during each frame, and the slower it ends up. As OSDL cannot guess your locking needs, it lets you manage them and always assumes you did right: with such primitives, OSDL uses the surface as if it were locked, whether or not that is true.
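The effect of locking granularity can be illustrated with a minimal C++ sketch. `MockSurface`, `putPixel` and the two drawing functions are hypothetical names introduced for this illustration only; they are not OSDL's actual API. The sketch merely counts how many locks each strategy acquires.

```cpp
#include <cassert>

// Hypothetical stand-in for a lockable surface; this is not OSDL's actual API.
class MockSurface {
public:
    void lock() {
        assert(!locked_);
        locked_ = true;
        ++lockCount_;
    }
    void unlock() {
        assert(locked_);
        locked_ = false;
    }
    // Low-level primitive: it does not lock anything itself and simply
    // assumes that the caller already locked the surface.
    void putPixel(int /*x*/, int /*y*/) { ++pixelCount_; }

    int lockCount() const { return lockCount_; }
    int pixelCount() const { return pixelCount_; }

private:
    bool locked_ = false;
    int lockCount_ = 0;
    int pixelCount_ = 0;
};

// Fine granularity: one lock/unlock pair per pixel.
void drawLineFineGrained(MockSurface& surface, int length) {
    for (int x = 0; x < length; ++x) {
        surface.lock();
        surface.putPixel(x, 0);
        surface.unlock();
    }
}

// Coarse granularity: lock once, do all the drawing, unlock once.
void drawLineCoarseGrained(MockSurface& surface, int length) {
    surface.lock();
    for (int x = 0; x < length; ++x) {
        surface.putPixel(x, 0);
    }
    surface.unlock();
}
```

For a 100-pixel line, the fine-grained version acquires 100 locks whereas the coarse-grained one acquires a single lock, for the same number of pixels drawn.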

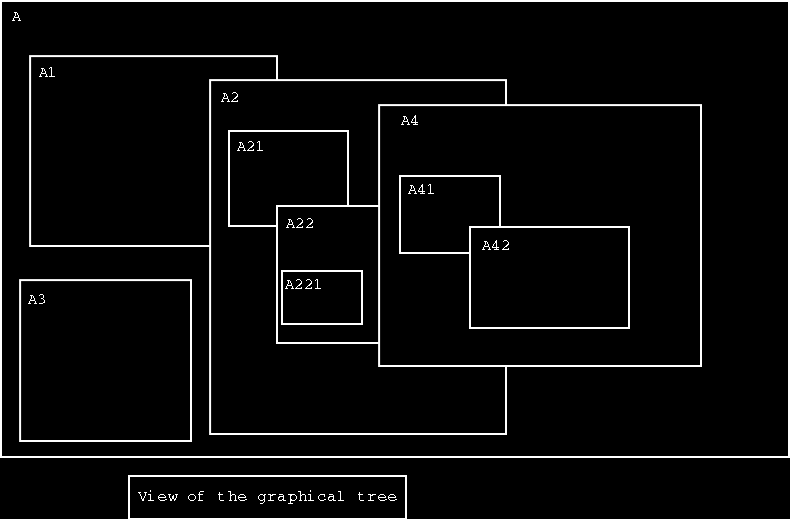

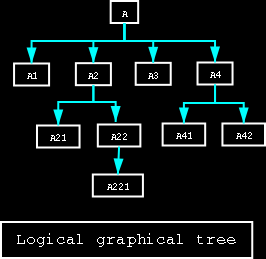

The overall objective of this framework is to draw efficiently surfaces containing an arbitrary level of nested widgets. Widgets being themselves surfaces, they can contain widgets, and so on. In the end, the Surface-Widget framework deals with trees of graphical elements, as shown below.

The key point is that the internal video buffer of a graphical element, when up-to-date, should contain both its own rendering and the video buffers of all the widgets of its subtree, blitted in the correct order at their correct locations.

From this view the following tree, focusing on containing relationships, can be deduced :

We can see that when a graphical element is contained by another, it is a child of its container. Except A, which is a simple Surface, all the nodes are Widgets (thus, also, Surfaces through inheritance : Widgets are merely Surfaces having a container).

Children of a node are actually sorted in a list, their order being from bottom to top. For example, A21 shows up before A22 in the list of A2, since A21 is below A22 (closest to the container's back).

Our goal is to manage the display of such a graphical tree, aiming at redrawing a reasonably small subset of the graphical elements when one or more of them have changed.

The relationship between nodes being container-based, the surface owned by each node is able to contain a graphical view of all the elements of its subtree.

To manage the rendering, the whole point is to walk the graphical tree carefully so that widgets are redrawn in the correct order, which is from bottom to top, since they are likely to overlap. The correct algorithm is a depth-first walk of the tree: at each node, one first has to deal with the background of the widget, then with the whole subtree of its first child (the child that is closest to the back), then with the whole subtree of its second child, and so on.

To do so, we defined the needsRedraw boolean attribute for each graphical element (a node of the tree, hence a Surface, be it specifically a Widget or not). This attribute tells whether this node and its subtree are up-to-date. This information is to be propagated from any node that changed, up to the root of the tree, so that unchanged subtrees can be entirely skipped during the redraw phase : a node whose needsRedraw attribute is false should have its whole subtree unchanged, therefore its internal video buffer must be up-to-date.

Let's see the algorithm described in pseudo-code :

    class Surface
    {
        method redraw()
        {
            if ( needsRedraw )
            {
                redrawInternal() ;
                for c in myWidgets()
                    c.redraw() ;
                needsRedraw = false ;
            }
        }
    }

    class Widget : Surface
    {
        method redraw()
        {
            Surface::redraw() ;
            BlitTo( getContainer(), getMyVideoBuffer() ) ;
        }
    }

In a few words, a Surface redraws itself and then its widgets, in the correct order, if and only if its needsRedraw attribute is true. A Widget does the same as a Surface, except that once it is up-to-date, it blits its internal buffer onto that of its container.
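The pseudo-code can be turned into a small, compilable C++ sketch. The `SketchSurface` and `SketchWidget` classes below are illustrative assumptions, not OSDL's real classes: painting and blitting are replaced by entries appended to a log, so that the order of operations can be observed.

```cpp
#include <cassert>
#include <string>
#include <utility>
#include <vector>

// Illustrative classes only: they mirror the pseudo-code, not OSDL's real API.
class SketchSurface {
public:
    explicit SketchSurface(std::string name) : name_(std::move(name)) {}
    virtual ~SketchSurface() = default;

    const std::string& name() const { return name_; }

    // Widgets must be added from bottom to top.
    void addWidget(SketchSurface& widget) { widgets_.push_back(&widget); }
    void setNeedsRedraw(bool value) { needsRedraw_ = value; }

    virtual void redraw(std::vector<std::string>& log) {
        if (!needsRedraw_) {
            return;  // The whole subtree is up-to-date.
        }
        log.push_back("paint " + name_);  // Stands for redrawInternal().
        for (SketchSurface* widget : widgets_) {
            widget->redraw(log);
        }
        needsRedraw_ = false;
    }

private:
    std::string name_;
    std::vector<SketchSurface*> widgets_;
    bool needsRedraw_ = true;  // Newly created elements need a first repaint.
};

class SketchWidget : public SketchSurface {
public:
    SketchWidget(std::string name, SketchSurface& container)
        : SketchSurface(std::move(name)), container_(container) {
        container_.addWidget(*this);
    }

    void redraw(std::vector<std::string>& log) override {
        SketchSurface::redraw(log);
        // Up-to-date at this point: blit onto the container's buffer.
        log.push_back("blit " + name() + " -> " + container_.name());
    }

private:
    SketchSurface& container_;
};
```

With a root A containing A1 and A2, and A2 containing A21 and A22 (a reduced tree assumed for this sketch), the first call to `redraw` on A logs nine operations, starting with `paint A` and ending with `blit A2 -> A`; a second call with nothing changed logs nothing.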

The actual implementation of the algorithm relies on Ceylan's event management scheme: widgets, listening to their container, are informed through events that their redraw has to be performed.

Please note that the redrawInternal method, which does nothing by default, is meant to be overridden to allow a graphical element to repaint itself. For example, for a Surface, this operation could be, if needed, filling it with a background color or blitting an element of an image repository into the video buffer of this surface, so that it acts as a background. For a widget, the method could render the current time on the buffer, and so on.

For more complex cases, the widget may rely on another attribute, such as a boolean named changed, to record whether its view shall be recomputed or not. If it is true, then the view can be regenerated into a back buffer, and then blitted onto the widget video buffer. If changed is false, then the previously rendered back buffer can be safely reused and blitted. This allows the widget rendering (which is potentially demanding in resources) to be triggered only when appropriate.
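A minimal sketch of this caching scheme is given here, assuming a hypothetical clock widget whose buffers are modelled as strings. The class name, its methods and the string-based buffers are all inventions for illustration; only the changed flag and the back buffer come from the description above.

```cpp
#include <cassert>
#include <string>

// Hypothetical clock widget: buffers are modelled as strings for simplicity.
class SketchClockWidget {
public:
    void setMinute(int minute) {
        if (minute != minute_) {
            minute_ = minute;
            changed_ = true;  // The view must be recomputed at next redraw.
        }
    }

    // Regenerates the back buffer only when needed, then "blits" it.
    const std::string& redrawInternal() {
        if (changed_) {
            backBuffer_ = render();
            changed_ = false;
        }
        videoBuffer_ = backBuffer_;  // Stands for the blit to the video buffer.
        return videoBuffer_;
    }

    int renderCount() const { return renderCount_; }

private:
    // Stands for a potentially expensive rendering operation.
    std::string render() {
        ++renderCount_;
        return "minute=" + std::to_string(minute_);
    }

    int minute_ = 0;
    bool changed_ = true;  // Nothing has been rendered yet.
    int renderCount_ = 0;
    std::string backBuffer_;
    std::string videoBuffer_;
};
```

Calling `redrawInternal` several times in a row triggers a single rendering; a new rendering only happens after `setMinute` actually changes the displayed value.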

Let's suppose that, upon creation, surfaces and widgets have their needsRedraw attribute set to true, so that at the first redraw request they get repainted. Then we can start from a situation where everything is up-to-date before the first change occurs.

As long as all graphical elements remain as they are, surface A has its needsRedraw attribute set to false, therefore A.redraw() does nothing. If A is a screen surface, then calling A.update() will normally blit this surface to video memory, if needed, and it will be displayed. Otherwise, if A is not a screen surface, it will remain unchanged.

Let's suppose now that widget A22 is to change (for example, it is a clock and the displayed minute is to be incremented), and let's see how it should be handled.

First of all, each time a frame is being drawn, the overall surface A is asked to redraw itself through A.redraw(). Therefore A must have been informed in some way that one of its sub-elements, namely A22, has changed, in order to take that into account: only containers know how to redraw their elements with respect to their stacking order. The simplest way to do so is to have A22 set its needsRedraw attribute to true and also trigger its propagation from container to container until it reaches the root of the tree, A. Consequently, A22, A2 and A have their needsRedraw boolean set to true. This does not immediately trigger the redrawing of the tree: many widgets may change between two overall redraws, and redrawing at each change would lead to useless redraws between two frames. It is better to render just before the result is needed, when all states should be final.
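The propagation step can be sketched as a simple walk from the changed element up to the root. The `SketchElement` structure and its `markChanged` method are hypothetical names used for illustration; as noted above, the actual implementation relies on Ceylan's event management instead.

```cpp
#include <cassert>

// Hypothetical graphical element, reduced to what the propagation needs.
struct SketchElement {
    SketchElement* container;  // Null for the root of the tree.
    bool needsRedraw = false;

    explicit SketchElement(SketchElement* owner = nullptr) : container(owner) {}

    // Flags this element and all its containers, up to the root.
    // Nothing is redrawn here: the redraw is deferred to the next frame.
    void markChanged() {
        for (SketchElement* e = this; e != nullptr; e = e->container) {
            e->needsRedraw = true;
        }
    }
};
```

With the example tree, calling `markChanged` on A22 flags A22, A2 and A, whereas A1 and A21 are left untouched.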

When the time comes for A to be actually redrawn (because its needsRedraw attribute is true), many nodes will have to be redrawn: when a node changes, it has to be repainted, and so do all widgets that may overlap it, that is, all widgets which come after it in the depth-first tree walk, starting from its remaining sibling nodes.

A first has to redraw itself (its own background, thanks to redrawInternal) since its needsRedraw attribute is true. Then it has to iterate through its ordered children list, starting with A1.

If a node has its needsRedraw attribute set to false, then it can be safely assumed that none of its subnodes have changed, and therefore that its internal video buffer (containing a view of its full widget hierarchy) is up-to-date. Hence its video buffer just has to be blitted onto the one of its container.

As in our example node A needs a redraw and A1 does not, the precomputed A1 video buffer must be up-to-date, and it can be safely blitted onto the newly redrawn background of A.

The A1 subtree being completed, it is now the turn of A2, which has its needsRedraw attribute set to true, as mentioned earlier. A21 behaves for A2 as A1 did for A: it can be directly blitted. As for A22, its needsRedraw attribute is true too, which means that it must be repainted and that each of its children must be redrawn in turn and copied back to its buffer.

Thanks to this approach, the number of redraws should be far lower than with the brute-force repainting of all widgets each time one of them changes: our worst case is all the widgets being repainted at each frame.

However, overlapping considerations could be taken into account to further reduce the widget repaintings. It would be nevertheless a complex algorithm with potentially multiple scans of the graphical tree to determine whether a given widget is overlapped by front widgets. For the moment, no such need appeared in our projects, since few widgets are commonly used for multimedia applications and games.

First, a basic understanding of the Ceylan MVC framework is required. Please refer to the Ceylan user's guide if necessary.

At the core of the system is the OSDL scheduler. When used, it works at relatively high frequencies, by default 1 kHz (1000 Hz). When the best-effort scheme is selected (as opposed to the non-interactive, deadline-free mode, also called the screenshot mode), the scheduler does its best to enforce three frequencies, for input, simulation and rendering:

These frequencies are expressed relative to real time, so that the virtual time is kept synchronous with the user time. As the vast majority of systems are soft real-time, the best-effort scheme cannot enforce strict frequencies, and deadlines, be they for input, simulation or rendering, are commonly missed, often because of time slices being given to other processes by the operating system.

When an input skip occurs, by default nothing is done, since the next input tick will read all events of the queue, beginning with the ones that were not read because of the skip. If latency is deemed too high, the Scheduler::onInputSkipped method can be overridden so that inputs are nevertheless read as soon as possible after the missed input deadline, thanks to a call to Scheduler::scheduleInput.

When a simulation tick or a rendering tick is missed, the relevant method (respectively onSimulationSkipped or onRenderingSkipped) is called. They can be overridden so that some counter-measures are taken. One should ensure that on average these corrective tasks are quicker to perform than the nominal ones, otherwise the scheduler cannot keep pace with real time.
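The skip mechanism can be sketched with a mock scheduler, under strong simplifying assumptions: time is an integer count of engine ticks, only simulation deadlines are modelled, and a deadline counts as missed when the scheduler wakes up one full period or more after it. `MockScheduler`, `wakeUp` and the signature given to `onSimulationSkipped` are assumptions made for this sketch; the real OSDL scheduler may differ on all these points.

```cpp
#include <cassert>
#include <vector>

// Mock scheduler: not OSDL's actual class, signatures are assumptions.
class MockScheduler {
public:
    explicit MockScheduler(long period) : period_(period) {}
    virtual ~MockScheduler() = default;

    // To be called with the current time each time the scheduler wakes up.
    void wakeUp(long now) {
        if (now < nextDeadline_) {
            return;  // Nothing is due yet.
        }
        // Deadlines missed by a full period or more are reported as skipped.
        while (now - nextDeadline_ >= period_) {
            onSimulationSkipped(nextDeadline_);
            nextDeadline_ += period_;
        }
        ++performed_;  // The current simulation tick is performed.
        nextDeadline_ += period_;
    }

    long performed() const { return performed_; }

protected:
    // Does nothing by default; meant to be overridden.
    virtual void onSimulationSkipped(long /*missedDeadline*/) {}

private:
    long period_;
    long nextDeadline_ = 0;
    long performed_ = 0;
};

// Example override: simply records which deadlines were skipped.
class RecordingScheduler : public MockScheduler {
public:
    using MockScheduler::MockScheduler;
    std::vector<long> skipped;

protected:
    void onSimulationSkipped(long missedDeadline) override {
        skipped.push_back(missedDeadline);
    }
};
```

With a period of 10 and wake-ups at times 0, 10, 35 and 40, four simulation ticks are performed and the deadline at time 20 is reported as skipped.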

When the corresponding deadline is met, the scheduler increments the current input tick and reads all queued events from the back-end input queue (typically, SDL). Each event is decoded and sent to the relevant input device (keyboard, mouse or joystick). If an MVC controller is linked to the input device, the controller state is updated.

For some kinds of events (e.g. key presses), if no controller is registered for this event, an event handler is searched for and, if one matches, triggered with that event. The difference with a controller is that handlers behave like functions uncoupled from all frameworks, including MVC.

For example, a key responsible for the fullscreen toggle should not be managed by a controller, since the MVC framework is meant to apply to actual game objects, not to contextual settings. This key should be managed instead by a dedicated handler.

At the end of the input polling step, all pending low-level events should be taken into account, all handlers should have been triggered and all controllers should have been updated if needed. This phase is event-driven, which means a controller (or a handler) will not be activated unless targeted by a specific event. No model or view is therefore taken into account during the input step.
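The controller-first, handler-second dispatch described above can be sketched as follows. `MockKeyboard`, `KeyController` and their methods are hypothetical names chosen for the illustration, and keys are reduced to plain characters; this is not the OSDL input API.

```cpp
#include <cassert>
#include <functional>
#include <map>
#include <string>
#include <utility>

// Hypothetical MVC controller: its state is updated by key presses.
struct KeyController {
    int presses = 0;
    void keyPressed() { ++presses; }
};

// Hypothetical keyboard device dispatching decoded key events.
class MockKeyboard {
public:
    void linkController(char key, KeyController& controller) {
        controllers_[key] = &controller;
    }
    void setHandler(char key, std::function<void()> handler) {
        handlers_[key] = std::move(handler);
    }

    // Returns which kind of recipient processed the event.
    std::string dispatch(char key) {
        auto controller = controllers_.find(key);
        if (controller != controllers_.end()) {
            controller->second->keyPressed();
            return "controller";
        }
        // No controller registered: fall back to a plain handler, if any.
        auto handler = handlers_.find(key);
        if (handler != handlers_.end()) {
            handler->second();
            return "handler";
        }
        return "ignored";
    }

private:
    std::map<char, KeyController*> controllers_;
    std::map<char, std::function<void()>> handlers_;
};
```

In this sketch a game object would be driven through a linked controller, whereas a contextual setting such as the fullscreen toggle would be registered with `setHandler`.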

Logic is managed during simulation ticks, which are and must be the only unit of time used in the virtual world. Objects that can be scheduled are called Active Objects, and they can be scheduled for simulation according to two non-exclusive schemes :

All kinds of objects can be scheduled, as long as they need to live on their own, i.e. without being triggered by anything specific. Among all these active objects, a specialized form exists, called Model, the M of the MVC framework. A Model encapsulates the state of an active world object which may be linked to controller(s) and/or to views.

Only the model is activated during the simulation tick, but to compute its new state it may need inputs from its controller(s). Whereas the input step updated all controllers, the simulation step (all steps being uncoupled on purpose) updates the models, which may have to read some of their inputs from controllers. Note therefore that here the controller-model pair is driven by the model : another scheme could be event-driven, i.e. low level events coming from the input layer could trigger the update of controllers, which in turn trigger the update of models, views, etc.
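The model-driven scheme can be sketched with two small types, both hypothetical: a controller whose state is written during input ticks, and a model which reads that state when its simulation tick occurs. Names, attributes and the speed constant are assumptions for illustration.

```cpp
#include <cassert>

// Hypothetical controller: only updated during the input step.
struct JoystickController {
    double axisX = 0.0;  // Last known horizontal axis value, in [-1, 1].
};

// Hypothetical model: only activated during the simulation step.
class ShipModel {
public:
    explicit ShipModel(const JoystickController& controller)
        : controller_(controller) {}

    // The model pulls its inputs from the controller; the controller
    // never pushes anything to the model.
    void onSimulationTick() {
        x_ += kSpeedPerTick * controller_.axisX;
    }

    double x() const { return x_; }

private:
    static constexpr double kSpeedPerTick = 2.0;
    const JoystickController& controller_;
    double x_ = 0.0;
};
```

Since both steps are uncoupled, several simulation ticks may read the same controller state if no input tick occurred in between.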

The simulation tick is typically where physics takes place in a game.

The rendering tick is where the representation of the virtual world is built for the user. Based on the available description of the world, its purpose is to generate a view, mainly video and audio, presented to the user. To do so, a dedicated module, the root renderer, is used. This root renderer must take charge of everything, either by doing it itself or, more generally, by delegating to specialized sub-renderers, usually purely audio or purely video.

To render the world, Views, the V of the MVC framework, are taken into account. Most of the time, one cannot render a full scene by just iterating over the views and asking them to render themselves in turn. Usually, the scene should be organized, for example in a BSP tree, so that the proper drawings (for the video) are performed. It is up to the (here, video) renderer to choose the relevant approach.

Where should the AI thinking take place? The two candidates are obviously the simulation step (since simulating, say, a wolf, could include guessing what it decides) and the input step (in this case, the model of the wolf describes only its body, and this particular wolf, that could be controlled by, say, a joystick, happens to be controlled by a program).

Whereas it may seem more logical to prefer the second way (all wolves are treated similarly, be they human-controlled or programmatically controlled), it may cause some issues since input ticks usually occur at lower frequencies than simulation ticks. From a scheduling point of view, AI and models may behave very similarly. It finally ends up in a familiar discussion: is the thinking a pure product of the body (AI in model), or is the soul fundamentally different from its physical support (AI in controller)?

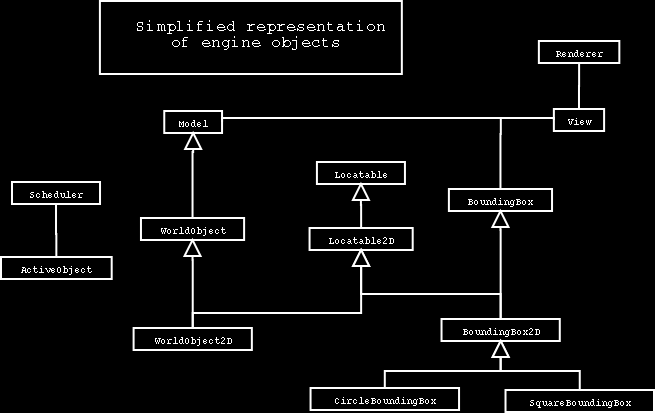

Simply put, objects can be living or not, independently of having any kind of representation in the world. These objects are active objects (OSDL::Engine::ActiveObject), and are to be managed by the scheduler (OSDL::Engine::Scheduler). Active objects can be completely abstract, such as the remaining time before the game state is saved.

Completely unrelated to being active or not, objects can have some interactions with the world. These are world objects (OSDL::Engine::WorldObject), which means they are Ceylan::Model instances in the MVC framework. So they are taken into account in two ways : when they impact the world, thanks to their Ceylan::View instances (if any), and when the world impacts them, thanks to their Ceylan::Controller instances (if any).

This does not imply in any way that these world objects are active. For example, a stone can be perceived by any observer (thanks to its View, be it visual, olfactory, auditory, etc.), and someone could throw it (thanks to its Controller). This does not mean that the stone thinks, i.e. needs dedicated processing power in any way. As such, a stone should not be an active object. On the contrary, a bird might be both a world object and an active object, since it clearly belongs to the world (one can view, smell, hear or touch it for example), and it is clearly not passive (it can fly according to its own will, therefore it may need processing power regularly).
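The independence between being active and belonging to the world can be sketched with two unrelated base classes. All the types below are simplified stand-ins invented for this illustration (the real OSDL::Engine classes are richer and may be organized differently); the stone, the bird and the save countdown are the examples used in the text.

```cpp
#include <cassert>
#include <vector>

// Simplified stand-ins, not the actual OSDL::Engine classes.
struct SketchActiveObject {
    virtual ~SketchActiveObject() = default;
    virtual void onActivation() = 0;  // Called by the scheduler.
};

struct SketchWorldObject {
    virtual ~SketchWorldObject() = default;
};

// In the world, but passive: never scheduled.
struct Stone : SketchWorldObject {};

// In the world and active.
struct Bird : SketchWorldObject, SketchActiveObject {
    int wingFlaps = 0;
    void onActivation() override { ++wingFlaps; }
};

// Active but completely abstract: no presence in the world.
struct SaveCountdown : SketchActiveObject {
    int remainingTicks = 3;
    void onActivation() override {
        if (remainingTicks > 0) {
            --remainingTicks;
        }
    }
};

// Minimal scheduler: it only knows about active objects.
class SketchScheduler {
public:
    void registerObject(SketchActiveObject& object) {
        objects_.push_back(&object);
    }
    void tick() {
        for (SketchActiveObject* object : objects_) {
            object->onActivation();
        }
    }

private:
    std::vector<SketchActiveObject*> objects_;
};
```

A `Stone` cannot even be registered in this scheduler, since it is not an active object, whereas a `Bird` and a `SaveCountdown` both can.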

World objects are all locatables (Ceylan::Maths::Linear::Locatable) in some way. It means that, depending on the space they are to be in (2D, 3D), they will respect the corresponding locatable inheritance. For example, an object in a 2D world will be an OSDL::Engine::WorldObject2D, which means it will be both a WorldObject and a Locatable2D.

Locatables are used to manage the position and angle(s) of rigid-body objects in space. This is done thanks to a homogeneous matrix representing the geometrical transformation which defines their position and angle(s) relative to their father locatable (if any). For example, the arm of a 3D robot will be a WorldObject3D, i.e. a world object being also a Locatable3D, which embeds a 4x4 homogeneous matrix. The father locatable of the arm might be the torso of the robot, which in turn has a father locatable, and so on.
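The chaining of transformations through father locatables can be sketched in 2D, where a homogeneous matrix is 3x3 instead of the 4x4 matrix used in 3D. `Matrix3`, `makeTransform` and `SketchLocatable2D` are hypothetical names for this illustration and do not reproduce the Ceylan::Maths::Linear classes.

```cpp
#include <cassert>
#include <cmath>

// 3x3 homogeneous matrix for 2D rigid-body transformations.
struct Matrix3 {
    double m[3][3];
};

Matrix3 multiply(const Matrix3& a, const Matrix3& b) {
    Matrix3 result{};  // Value-initialised: all coefficients are zero.
    for (int i = 0; i < 3; ++i) {
        for (int j = 0; j < 3; ++j) {
            for (int k = 0; k < 3; ++k) {
                result.m[i][j] += a.m[i][k] * b.m[k][j];
            }
        }
    }
    return result;
}

// Rotation of 'angle' radians followed by a translation of (tx, ty).
Matrix3 makeTransform(double angle, double tx, double ty) {
    const double c = std::cos(angle);
    const double s = std::sin(angle);
    return Matrix3{{{c, -s, tx}, {s, c, ty}, {0.0, 0.0, 1.0}}};
}

// Hypothetical locatable: its matrix is expressed in its father's frame.
struct SketchLocatable2D {
    Matrix3 local;
    const SketchLocatable2D* father;  // Null when there is no father.

    // Transformation from this locatable's frame to the global frame.
    Matrix3 global() const {
        return father ? multiply(father->global(), local) : local;
    }
};
```

For instance, with a torso located at (10, 5) and rotated by a quarter turn, an arm attached at (2, 0) in the torso's frame ends up at (10, 7) in the global frame, which can be read from the last column of the matrix returned by `global`.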

As world objects can be complex, they all have at least one bounding box, which is a simpler abstract object which contains them as a whole, and whose shape allows easier computations. This world object-linked bounding box is itself a Locatable (for our robot arm, it would be Ceylan::Maths::Linear::Locatable3D), and its shape could be a sphere. This box is defined relative to its object, so the latter is the father locatable of the former.

Bounding boxes are particular insofar as they are used both by the model (e.g. to detect collisions) and by most views (e.g. to know whether the object is visible), whereas model and views are uncoupled as much as possible (e.g. they might live on different computers, models on a server and views on clients). For local executions, the bounding boxes will be shared between model and views, whereas in a distributed context there will be as many replicas as processes involved.
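To illustrate why a simple bounding shape allows easier computations, here is a minimal sketch of a collision test between two bounding spheres expressed in the same frame. `Vec3`, `SketchBoundingSphere` and `intersects` are names assumed for this example only; they are not OSDL or Ceylan types.

```cpp
#include <cassert>

struct Vec3 {
    double x;
    double y;
    double z;
};

// Hypothetical bounding sphere, expressed in the global frame.
struct SketchBoundingSphere {
    Vec3 center;
    double radius;
};

// Two spheres collide when the distance between their centers does not
// exceed the sum of their radii. Squared values avoid a square root.
bool intersects(const SketchBoundingSphere& a, const SketchBoundingSphere& b) {
    const double dx = a.center.x - b.center.x;
    const double dy = a.center.y - b.center.y;
    const double dz = a.center.z - b.center.z;
    const double reach = a.radius + b.radius;
    return dx * dx + dy * dy + dz * dz <= reach * reach;
}
```

Whatever the complexity of the enclosed objects, this test costs a handful of arithmetic operations, which makes it a cheap first filter before any finer check.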

If one wants to render a non-interactive session of the simulated world into an MPEG file, one can use Events::EventsModule::setScreenshotMode. It will generate a PNG file for each frame demanded by the user-specified framerate. Then the PNG files can be gathered into an MPEG file thanks to mencoder.

    mencoder "mf://*.png" -mf fps=25 -o output.avi -ovc xvid

    mencoder -of mpeg -ovc lavc -lavcopts vcodec=mpeg1video -oac copy "mf://*.png" -mf fps=25 -o output.mpeg

Here is a simple makefile which automatically generates an MPEG movie out of the generated PNG files:


Try modprobe joydev (it should trigger the use of the object file joydev.o, see for example /lib/modules/2.x.y-z/kernel/drivers/input/joydev.o). Use our joystick test (under $OSDL_ROOT/bin/events/testOSDLJoystick) to check whether the joystick(s) is/are correctly detected, including relevant axes, hats, balls and buttons.

error while loading shared libraries: libXXX: cannot open shared object file: No such file or directory

Here libXXX stands for a library such as SDL_image. Failing to load libraries often occurs when LD_LIBRARY_PATH is not set correctly. Did you forget to issue source ${LOANI_ROOT}/LOANI-installations/OSDL-environment.sh?

The program only prints Aborted, and that's all. If a function has a throw statement in its signature, then any exception it raises must be listed by it. Failing to do so leads to only Aborted (or one of its translated versions, such as Abandon in French) being written. Using the debugger (gdb) with: gdb myProgram, then run, then where, shows in the backtrace which succession of calls stumbled on an unexpected exception. The code just has to be corrected afterwards. gdb may nevertheless fail at startup; see the next pieces of advice in this case.

With gdb, I got: BFD: BFD 2.15.93 20041018 internal error, aborting at cache.c line 495 in bfd_cache_lookup_worker. This can happen when a make all was issued while still in gdb, or when the current directory used when launching gdb was deleted (e.g. a make clean was issued while still in gdb). The solution is to quit gdb, check that the current directory still exists, and reset it, possibly thanks to a cd `pwd`.

If you are contributing from a LOANI-installed version of Ceylan or OSDL, and if you did not select the --currentCVS LOANI option, then the versions you downloaded correspond to the latest stable release, not to the one that was up-to-date at that time. This is done so that developers are able to modify files at any time and possibly have a broken latest CVS, whereas end-users can still use LOANI to retrieve a fully functional version.

To achieve this, the stable versions are retrieved through a tagged CVS checkout. One ought to know that CVS tags are sticky, which means that, unless a special CVS command is used, all files keep this tag. Therefore they won't be updated as usual in either direction: if you modify them locally, they won't be put in the repository, and if the repository changes, they won't be updated locally. The only way of letting them change is to remove that sticky tag, thanks to the cvs update -A command, which operates recursively.

If you have information more detailed or more recent than what is presented in this document, or if you noticed errors, omissions or points insufficiently discussed, drop us a line!